
Human-AI Interaction for Augmented Reasoning

Improving Human Reflective and Critical Thinking with Artificial Intelligence

April 26, 2025 | 9:00-17:00 JST | CHI 2025, Yokohama, Japan

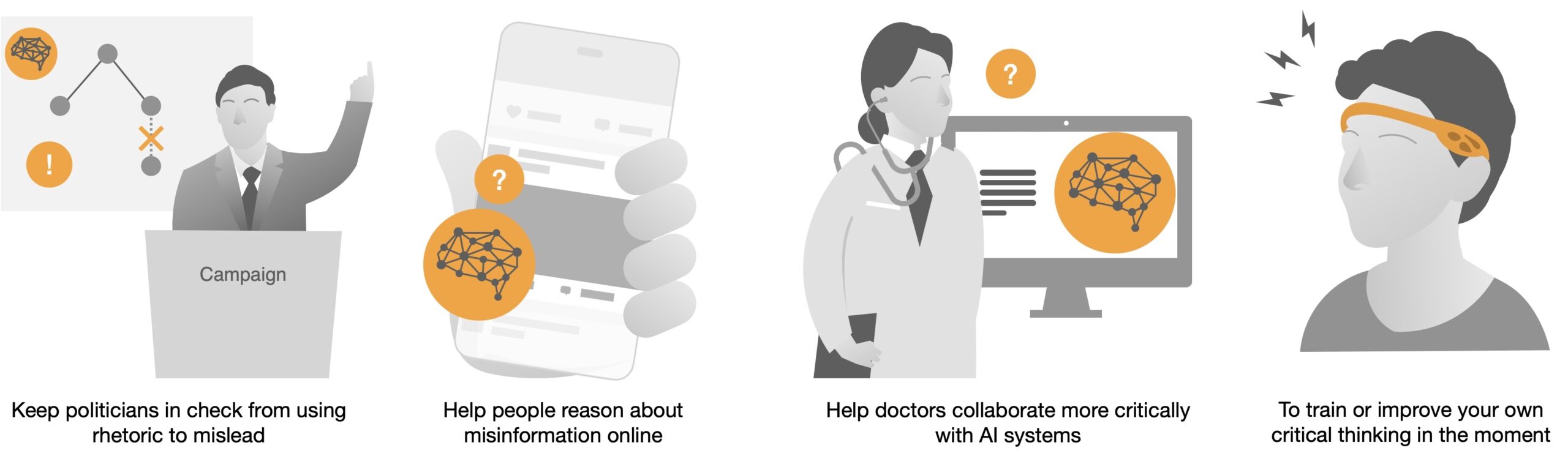

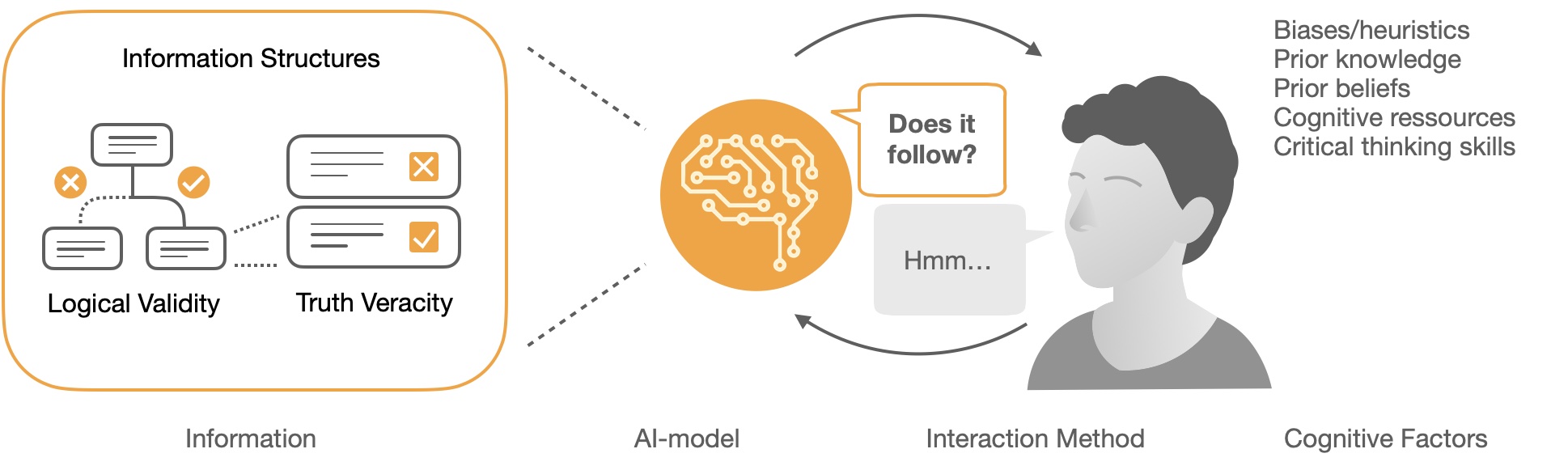

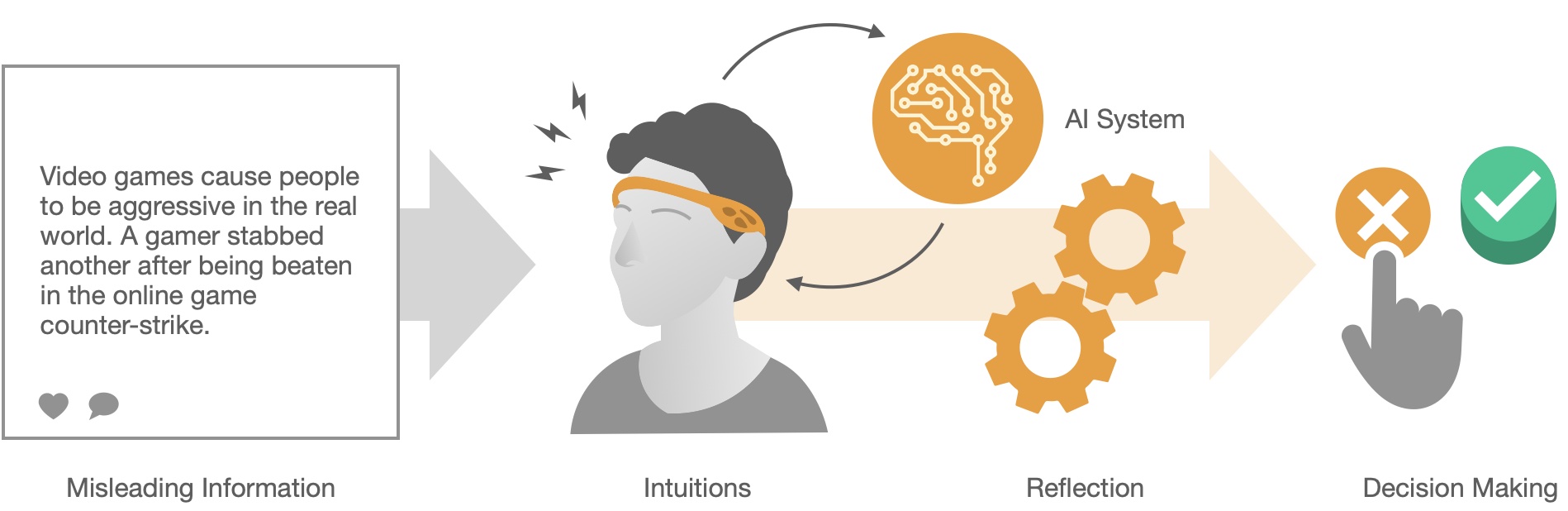

AI-Augmented Reasoning systems are cognitive assistants that support human reasoning by providing AI-based feedback that can help users improve their critical reasoning skills. Made possible with new techniques like argumentation mining, fact-checking, crowdsourcing, attention nudging, and large language models, AI-augmented reasoning systems can provide real-time feedback on logical reasoning, help users identify and avoid flawed arguments and misinformation, suggest counter-arguments, provide evidence-based explanations, and foster deeper reflection.

The goal of this workshop is to bring together researchers from AI, HCI, cognitive and social science to discuss recent advances in AI-augmented reasoning, to identify open problems in this area, and to cultivate an emerging community on this important topic.


What is AI Augmented Reasoning?

AI Augmented Reasoning refers to the application of artificial intelligence (AI) systems to support, facilitate, and improve human reasoning. Such systems provide insights, identify patterns, uncover biases, and offer guidance that is intuitive to the user, enabling users to make better-informed decisions that they feel they reached through their own thinking.

AI-enhanced reasoning systems differ from other AI information-processing systems in that they focus not only on providing accurate information or optimizing decision outcomes but also on actively engaging users in reflective thinking or building strong, appropriate intuitions.

Workshop Schedule

- 9:00 AM: Opening & Welcome

- 9:15 AM: Keynote 1. Thomas Costello: “The promise and peril of AI persuasion: Evidence from RCTs on contentious social and political beliefs”

- 10:00 AM: Keynote 2. Paolo Torroni: “Argumentation with Artificial Intelligence”

- 10:45 AM: Coffee Break and Poster Session

- 11:15 AM: Panel on AI for Human Reasoning with Thomas Costello, Paolo Torroni, and Lama Ahmad. Moderator: Valdemar Danry.

- 11:45 AM: Lightning Talks 1

- 12:45 PM: Lunch Break

- 2:10 PM: Lightning Talks 2

- 3:10 PM: Coffee Break and Poster Session

- 3:45 PM: Group Activity: Definitions, Goals, Applications and Methods

- 5:00 PM: Next Steps and Closing Remarks

Keynote Speakers & Panelists

Dr. Thomas Costello

Thomas Costello is an Assistant Professor of Psychology at American University and a Research Associate at the MIT Sloan School of Management. He studies where political and social beliefs come from, how they differ from person to person, and ultimately why they change, using the tools of personality, cognitive, clinical, and political science. He is best known for his work on (a) leveraging artificial intelligence to reduce conspiracy theory beliefs and (b) the psychology of authoritarianism. He has published dozens of research papers in peer-reviewed outlets, including Science, Journal of Personality and Social Psychology, Psychological Bulletin, and Trends in Cognitive Sciences. Thomas developed DebunkBot.com, a public tool for combatting conspiracy theories with AI.

Dr. Paolo Torroni

Dr. Paolo Torroni has been an associate professor at the University of Bologna since 2015. His primary research focuses on artificial intelligence, particularly natural language processing, multi-agent systems, and computational logics. He has authored over 180 scientific publications. He is the head of the Language Technologies Lab, a past director of the Master’s Degree in Artificial Intelligence in Bologna, and a visiting fellow at the European University Institute in Florence.


Proceedings

Lightning Talks

THE GOAL: Misalignment between the measured metrics and the nebulous concept of critical thinking in joint decision making [Link]

Elena Sergeeva, Anastasia Sergeeva

Insight Box: A Multi-agent AI assistant for detecting and addressing cognitive distortions [Link]

Ram Priyadarshini Ramchandran

Cognitive Dissonance Artificial Intelligence (CD-AI): The Mind at War with Itself. Harnessing Discomfort to Sharpen Critical Thinking [Link]

Delia Deliu

Human-centered World Modeling: Enhancing Multi-Agent AI Adaptability with Chain-of-Thought and Symbolic Reasoning [Link]

Reza Habibi, Zhiyu Lin, Jiahong Li, Tejas Polu, Ashwin Nagarajan, Magy El-Nasr

Evaluating Eye Tracking and Electroencephalography as Indicator for Selective Exposure During Online News Reading [Link]

Thomas Krämer, Francesco Chiossi, Thomas Kosch

Navigating the State of Cognitive Flow: Context-Aware AI Interventions for Effective Reasoning Support [Link]

Dinithi Dissanayake, Suranga Nanayakkara

An Approach to Grounding AI Model Evaluations in Human-derived Criteria [Link]

Sasha Mitts

Strategic Reflectivism In Intelligent Systems [Link]

Nick Byrd

Supporting Data-Frame Dynamics in AI-assisted Decision Making [Link]

Chengbo Zheng, Tim Miller, Alina Bialkowski, H Peter Soyer, Monika Janda

Shots and Boosters: Exploring the Use of Combined Prebunking Interventions to Raise Critical Thinking and Create Long-Term Protection Against Misinformation. [Link]

Huiyun Tang, Anastasia Sergeeva

Factually: Exploring Wearable Fact-Checking for Augmented Truth Discernment [Link]

Chitralekha Gupta, Suranga Nanayakkara

Beyond static AI evaluations: advancing human interaction evaluations for LLM harms and risks [Link]

Lama Ahmad, Lujain Ibrahim, Saffron Huang, Markus Anderljung

Poster Sessions

AI That Makes You Think: Designing Systems for Guided Reasoning and Reflection [Link]

Naile B. Hacioglu, Maria Chiara Leva, Hyowon Lee

Lessons from designing AI-augmented systems for scientific audiences [Link]

Hana Zaydens, Kirsty Ewing

Enhancing Critical Thinking with AI: A Tailored Warning System for RAG Models [Link]

Charlotte Zhu

Intelligent Interaction Strategies for Context-Aware Cognitive Augmentation [Link]

Xiangrong (Daniel) Zhu, Yuan Xu, Tianjian Liu, Jingwei Sun, Yu Zhang, Xin Tong

Multimodal LLM Augmented Reasoning for Interpretable Visual Perception Analysis [Link]

Shravan Chaudhari, Trilokya Akula, Yoon Kim, Tom Blake

Promoting Real-Time Reflection in Synchronous Communication with Generative AI [Link]

Yi Wen, Meng Xia

Distributed Cognition for AI-supported Remote Operations: Challenges and Research Directions [Link]

Rune M. Jacobsen, Joel Wester, Helena Bøjer Djernæs, Niels van Berkel

The Impact of Precision of Algorithmic Advice on Human Sequential Decision-Making: Model and Experiments [Link]

Philippe Blaettchen, Wichinpong Park Sinchaisri

DeBiasMe: De-biasing Human-AI Interactions with Metacognitive AIED (AI in Education) Interventions [Link]

Chaeyeon Lim, Manni Cheung

Algorithmic Mirror: Designing an Interactive Tool to Promote Self-Reflection for YouTube Recommendations [Link]

Yui Kondo, Kevin Dunnell, Qing Xiao, Dr. Luc Rocher

Designing AI Systems that Augment Human Performed vs. Demonstrated Critical Thinking [Link]

Katelyn Xiaoying Mei

Call for Participation

If you are interested in the workshop, please submit your application below for virtual or in-person participation. The submission deadline is March 2nd, 2025. We will notify the accepted participants by March 24th 2025. The list of participants will be posted on the workshop website.

At least one author of each accepted position paper must attend the workshop and all participants must register for at least one day of the conference. We will host accepted papers on the workshop’s website for participants and others to review prior to the meeting.

Registration Deadline:

March 2, 2025

Extended Deadline: March 9, 2025, AoE

People interested in participating can apply by completing the form below indicating their disciplinary background and the nature of their interest in the topic, including:

- A link to a relevant design artifact

- Short Bio: Explain the type of background and/or expertise that you would bring to this workshop. Please include information about your role (e.g., student, faculty, industry, non-profit, etc.), your discipline (e.g., HCI, AI, ethics, philosophy, law, religion, etc.), and your scholarly or professional experience with topics relating to the theme (250 word limit)

- Workshop Goals: A statement about your motivation for participating in this workshop, the issue(s) on which you are most focused, and what you hope to gain from the experience. (500 word limit)

- Workshop Contribution: A short statement about how you expect to contribute to the workshop and enhance the experience for other attendees. (500 word limit)

Optional:

- A position paper of up to ten pages (plus references) in the ACM single-column format. We plan to publish proceedings on ArXiv.

Workshop Goals

The workshop’s primary goals are:

- Share State-of-the-Art Research: Present and discuss recent advances in AI-augmented reasoning and human-AI interaction. This includes new techniques, tools, and methodologies developed to enhance critical and reflective thinking.

- Identify Challenges and Opportunities: Highlight the current challenges, limitations, and potential risks associated with AI-augmented reasoning systems. Discuss opportunities for future research and development.

- Interdisciplinary Collaboration: Foster collaboration between researchers from AI, HCI, cognitive science, social science, and other relevant fields to create a multidisciplinary approach to developing AI-augmented reasoning systems.

- Ethical and Social Implications: Delve into the ethical and social issues surrounding AI-augmented reasoning, such as accidental overreliance and potential misuses of modeling user reasoning.

- Design Principles and Guidelines: Develop design principles and guidelines for creating AI-augmented reasoning systems that prioritize human agency, autonomy, and long-term learning. Consider the balance between AI assistance and human decision-making.

- Evaluation Methods: Discuss and propose methods for evaluating the effectiveness of AI-augmented reasoning systems. This includes metrics for assessing improvement in critical thinking skills and the impact on decision-making quality.

Topics

Topics of interest include but are not limited to:

- AI-based reasoning interventions and critical thinking support

- Studies on misinformation related to AI and mitigation

- Political/Democratic reasoning

- Argument mining and argument synthesis

- Fact-checking, attention nudging and information validation

- Human-computer interaction (HCI) methods that boost reasoning

- User modeling and information delivery

- Human-AI Interaction methods for critical thinking

- Wearable systems for cognitive support

- Cognitive theories of reflection and intuitive decision making

Workshop Organizers

Valdemar Danry

MIT Media Lab, United States

Pat Pataranutaporn

MIT Media Lab, United States

Christopher Cui

University of California, San Diego, United States

Jui-Tse (Ray) Hung

Georgia Institute of Technology, United States

Lancelot Blanchard

MIT Media Lab, United States

Zana Buçinca

Harvard University, United States

Chenhao Tan

University of Chicago, United States

Thad Starner

Georgia Institute of Technology, United States

Pattie Maes

MIT Media Lab, United States

Key Dates

Position paper submission deadline:

Sunday, March 2nd 2025 AoE

Extended to: Sunday, March 9, 2025 AoE

Notification of acceptance:

Monday, March 24th 2025

Note: We have received three times the number of expected sign-ups and are waiting for confirmation from CHI. We thank everyone for their interest in this important topic.

Workshop date:

Saturday, April 26th 2025

Accepted for Presentation

Delia Deliu

Ram Priyadharshini Ramachandran

Reza Habibi

Thomas Krämer

Nick Byrd

Chengbo Zheng

Huiyun Tang

Dinithi Dissanayake

Chitralekha Gupta

Sasha Mitts

Anastasia Sergeeva

Accepted for Posters

Steve Huntsman

Naile B. Hacioglu

Hana Zaydens

Charlotte Zhu

Xiangrong Zhu

Jingwei Sun

Yu Zhang

Yi Wen

Rune M Jacobsen

Park Sinchaisri

Chaeyeon Lim

Manni Cheung

Shravan Chaudhari

Yui Kondo and Kevin Dunell

Katelyn Xiaoying Mei

All Accepted Participants

Emails have been sent out with the options for in-person waitlist or virtual participation.